Notes on Vendor Neutral Observability Instrumentation

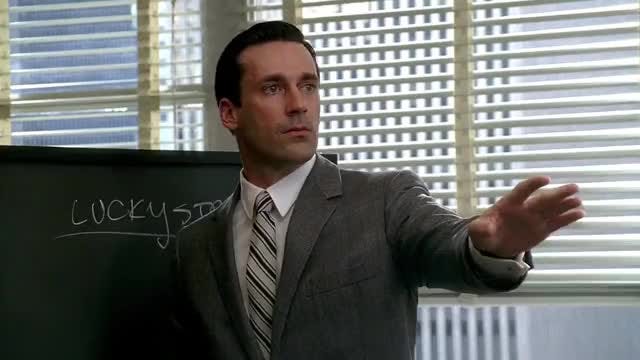

Don: There you go. There you go. [He writes: "Lucky Strike. It's Toasted."] Lee Garner, Jr.: But everybody else’s tobacco is toasted. Don: No, everybody else’s tobacco is poisonous. Lucky Strike’s is toasted.

I’d like to discuss the benefits and limitations of Vendor Neutral Observability Instrumentation. I believe that discussion around “Vendor Neutral”, or “Vendor Agnostic”, in the context of Observability has become a sales fueled oversimplification, framed as a silver bullet that solves Vendor Lock-In, rather than a nuanced topic with real tradeoffs. Whether the tradeoffs are worthwhile depends largely on the end user themselves, their needs, and their expectations.

This oversimplification is typical of Observability sales. A term like Vendor Neutral starts as technical jargon, in this case something used to describe Open Source projects like OpenTelemetry, or Vector, or Prometheus, even Fluentbit or Fluentd. It gains some mindshare, getting bubbled up through Feature Requests and meetings. Then, because it's the holy trinity of technical, popular, and new (“a glittering lure”, as Don Draper says), inevitably it gets seized upon by the relentless vampire squid that is sales and marketing.

And in their hands the term’s original meaning gets shoved through a Capitalism Rube Goldberg machine of SEO and Webinar Titles and Discovery Cold Calls and targeted Instagram ads and Product Demos at the little conference booths where they scan your badge when you ask for socks, and so on and so on until the original meaning is unrecognizable, a death of the author, and all that's left is a sort of buzzword signifier that ends up on RFPs as a box to be checked.

I see that happening with ideas like Vendor Neutral, and that worries me, because it elides a real understanding of the pros and cons. While I doubt that my little substack will change that trajectory, sometimes you just have to throw your egg at the wall and let it break, to borrow Murakami.

So let's crack open this egg and start with the good stuff. What are the realistic benefits of Vendor Neutral Observability Instrumentation?

Standards. Vendor Neutral Observability instrumentation usually conforms to a well documented specification. This means that for Brownfield deployments, end users won't have to rip and replace their vendor specific instrumentation code every single time they switch providers. On top of that, adoption of Vendor Neutral standards by cloud providers, libraries, and frameworks that end users build on top of means that it's more likely for native support to simply exist out of the box for those users. On a long enough timeline, this all means fewer developer cycles spent diving into various repositories and switching out SDKs and re-configuring everything and monkey patching critical frameworks and so on.

Free Trials. The standard specification provides mutual benefits to users and vendors alike. Those two-week free trials where users waste half the time just setting up the instrumentation can be a thing of the past, giving end users more time to focus on specific vendor features. Likewise, vendors can allocate fewer resources to field and sales engineers whose job is to debug and nudge along the installation and instrumentation process for data collection. Users can simply add another entry to some config and begin testing a different vendor platform.

Experimentation. This aforementioned standardization means that there's a foundation to experiment and build new tools. If a vendor of a particular point solution (say, logging) wants to expand into other tools (say, tracing), they don't have to think about naming and terminology and maintaining new SDKs and so on; they can simply focus on the backend feature set they want to provide and rely on the vendor neutral instrumentation to collect the data that powers it. Likewise, end users who want to build and experiment on top of Vendor Neutral tools can do so with confidence that those experiments have a long tail, that the learnings can travel with them to any future role, and that there's a deep pool of engineers who can jump in and pick up that work internally.

Resiliency. Observability Tooling is not Slack or Jira or one of the many other SaaS tools that Engineers use to do their job, where it's relatively common to build and integrate in a way that ties you inextricably to a particular vendor. Observability instrumentation emits data that's critical for not just monitoring and alerting on infrastructure health and code deployments, but also quickly resolving incidents when they do arise. Without it many companies will simply grind to a halt, stopping deploys, pausing rollouts. Not knowing if there's an outage is nearly as bad as having an outage. From a pure resiliency POV, having observability instrumentation that generates portable data means that end users have a realistic failover plan to send information to a backup provider or an OSS stack they manage, should a current vendor or backend have a significant outage.
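
To make that failover idea a little more concrete, here's a minimal sketch in plain Python. It assumes a "backend" is just a function that accepts a batch of records and raises on failure; `export_with_failover`, `primary_vendor`, and `self_hosted_backup` are names I made up for illustration, not part of any real SDK. The point is only that when the data is portable, the same batch can go to whichever backend is up.

```python
from typing import Callable, Iterable

# A "backend" is any callable that accepts a batch of telemetry records and
# raises an exception on failure. These are stand-ins, not a real SDK API.
Exporter = Callable[[list[dict]], None]


def export_with_failover(
    batch: list[dict], exporters: Iterable[tuple[str, Exporter]]
) -> str:
    """Try each backend in order; return the name of the first that accepts the batch."""
    errors = []
    for name, export in exporters:
        try:
            export(batch)
            return name
        except Exception as exc:  # broad on purpose: any failure triggers failover
            errors.append(f"{name}: {exc}")
    raise RuntimeError("all backends failed: " + "; ".join(errors))


def primary_vendor(batch: list[dict]) -> None:
    # Hypothetical primary vendor that is currently having an outage.
    raise ConnectionError("primary vendor unavailable")


received_by_backup: list[dict] = []


def self_hosted_backup(batch: list[dict]) -> None:
    # Hypothetical self-managed OSS stack; here it simply stores the records.
    received_by_backup.extend(batch)


batch = [{"name": "http.request", "duration_ms": 42, "status": "error"}]
used = export_with_failover(
    batch, [("primary", primary_vendor), ("backup", self_hosted_backup)]
)
print(used)
```

In a real deployment this logic would more likely live in a collector or agent than in application code, but the shape is the same: the records don't change, only the destination does.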

Those are real concrete benefits that users can reliably expect to get anywhere they use Vendor Neutral Observability Instrumentation. I think those benefits speak for themselves and are incredibly valuable.

But I also think there are limitations. Vendor Neutral is not a magic bullet, despite what some sales folks will tell you, but instead an option to consider with tradeoffs and limitations. And I don’t see people discussing those tradeoffs and limitations, so I’d like to enumerate them.

No Free Lunch. Switching to Vendor Neutral Observability Instrumentation does have a price. If a user wants to cut over, in the short term they'll still have to pay a one-time cost of installing and configuring new SDKs, even if they're not using a new vendor. Any alerts and queries based on previous standards will have to be updated to point at the new attributes and labels that the specification’s semantic convention deems important. And although the instrumentation is vendor neutral, there's still plenty of work to do if an end user does completely switch vendors: painstakingly migrating dashboards and alerts to reflect the new vendor's backend, and updating playbooks to reflect the new vendor's features. On top of that, all the tribal knowledge of previous vendor tools has to be re-learned for the new vendor's tools, and any data with long term trends (like most relevant infrastructure metrics data) will be lost when leaving the old vendor, as most vendors don't allow users to backfill data multiple days into the past.

A Platform. Vendor Neutral Observability does not guarantee a unified platform. Sure, there is a happy path for tight correlations between traces, metrics, and logs (at time of writing). But most Vendors have a platform much wider than point solutions for those three signals, whether that's Synthetic Tests, RUM Session Replay, SIEM, Database Monitoring, CI/CD tooling, Error Tracking, an Incident Management Tool, and so on. Most of these platform features are not covered by the specification of Vendor Neutral Observability Instrumentation, and so users are still left to the whims of the vendor, or forced to manage these platform connections and correlations themselves, if they want to use the full feature-set of their vendor.

Cutting Edge Tools. Vendor Neutral Observability Instrumentation doesn't provide users the bleeding edge of observability tooling. This is largely by intention. Enforcing a specification means that there has to be consensus around what makes it into the specification. That consensus takes time to build, with even critical features like, say, a Histogram format, or Sampling propagation, taking years to be accepted into a vendor neutral specification. The saying "If you want to go fast go alone, if you want to go far go together" cuts both ways, and sometimes a user (or a vendor) just wants to go fast and doesn't care about interoperability. In those cases they'll have to look outside of Vendor Neutral Observability Instrumentation.

Cost Control. Last, and most important, Vendor Neutral Observability Instrumentation won't magically cut costs. I think this is the biggest misconception to stop and think about. Because, let's take a step back. Those benefits I mentioned above are real and appealing, but I do wonder, is *that* why end users are so interested in Vendor Neutral Observability Instrumentation? For some, especially power users, maybe. But the underlying motivation for the large majority is pretty obvious to anyone who has ever read a Reddit post or Twitter thread reply or message board discussion about observability tools, which is this: they’re very expensive and the costs are unexpected.

Some of that cost confusion is the vendors' fault. The ability to manage costs at observability vendors is often a second-class feature, surprise spikes in billing are common, and negotiations of enterprise contracts are opaque. Even at vendors with better pricing transparency, users often have to trade off against heavy-handed sampling, rate limiting, or retention periods which are geared toward protecting vendor backends rather than enabling user behaviors. This is all pretty explicitly by design. Observability vendors are so richly valued largely because their Net Revenue Retention rates are so freakishly high, often over 130%, meaning that the average customer is not just sticky and spending as much as they have previously, they're spending 30% or 40% more every year than what they spent the year before.
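
To get a feel for what that compounding does to a bill, here's a rough back-of-the-envelope sketch. It assumes a single customer tracks the average and grows at a flat 130% every year, which is a simplification (Net Revenue Retention is an aggregate across customers), and the starting spend of 100,000 is a made-up number. `projected_spend` is just an illustrative helper.

```python
def projected_spend(initial: float, nrr: float, years: int) -> list[float]:
    """Spend at the end of each year if the bill is multiplied by `nrr` annually."""
    spend = []
    current = initial
    for _ in range(years):
        current *= nrr  # e.g. 1.30 means 30% more than the prior year
        spend.append(round(current, 2))
    return spend


# Hypothetical customer starting at 100,000 per year with 130% retention.
three_years = projected_spend(100_000, 1.30, 3)
print(three_years)
```

Under those assumptions the bill is roughly 2.2 times its starting size after three years (1.3 cubed is 2.197), without anyone ever signing off on "let's double our observability budget".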

Some of that growth is organic: new teams, new environments, new SKUs and products and so on. The classic "Land and Expand" playbook. But some of that growth is from anti-patterns. Explosions in cardinality on metric series because users need the additional dimensions to accomplish the task that looked "as simple as point and click" in the demo. Inability to filter and sample only the metrics and traces and logs they need, inflexibility with retention. Features priced assuming 24/7 usage but only needed once or twice a month. The list goes on. The focus of Observability sales is, by design, upselling, rather than helping users achieve observability and pare down the data they emit into a "single braid of structured data".
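
The cardinality point is easy to underestimate, so here's a small sketch of the arithmetic. It assumes the worst case, where every combination of label values actually shows up, so the number of distinct time series for a metric is the product of the distinct values per label. The label names and counts are invented for illustration.

```python
from math import prod


def series_count(label_cardinalities: dict[str, int]) -> int:
    """Upper bound on distinct series for one metric: product of values per label."""
    return prod(label_cardinalities.values())


# Hypothetical labels on a single request-latency metric.
base = {"service": 20, "endpoint": 50, "status_code": 5}

# The "one extra dimension" needed to answer a per-customer question.
with_customer = {**base, "customer_id": 1_000}

print(series_count(base))           # 5000
print(series_count(with_customer))  # 5000000
```

One additional label takes the upper bound from five thousand series to five million, and on a per-series pricing model that is the difference between a rounding error and a line item.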

And blame also lies at the feet of users. It's all too common for users to simply flip on the "ALL" setting and emit a firehose of data across multiple Observability Signals. Users can't expect both a delightful out of the box experience and a frugal, carefully curated one. If users are collecting the same error and stacktrace via a span event, a RED metric, a log, and an error tracking Event, they should expect to be billed for it four different times. It's up to them to take the time and curate the amount of data they emit and how they emit it.

While Vendor Neutral Observability Instrumentation may provide useful primitives for controlling these costs, simply having instrumentation that makes it easier to switch vendors will not make it cheaper. The cycles still must be spent by users on understanding, configuring, and applying the instrumentation appropriately. And likewise, while many Vendors may support Vendor Neutral Instrumentation, their core business models, ethics, and cost control posture are still going to be the paramount consideration for users who want to reduce their Observability costs, regardless of how much cheaper some special discount or pricing structure looks from afar.

Anyway, that’s how I see the world of Vendor Neutral Observability Instrumentation at the moment. There’s a lot to like and some tradeoffs to consider. As always, I am not the cosmos, just an IC who has opinions, but I hope you’ll take my opinions into consideration as folks try to sift through the sales and content marketing floating around Observability.

Thanks for reading,

Eric Mustin
